Back in December 2019, we came up with the idea of using chat-bots' ability to recognize intent to find customer leads[1] in social media posts. Our reasoning was that leads on social media tend to follow a pattern: the author states that they are looking to get a job done, preferably with some specific technology.

E.g.: We are looking for Laravel developers interested in building and maintaining a system destined to be used by Fortune 500 companies.

We figured this kind of pattern could be easily picked up by a Machine Learning model, such as the one that chat-bots use to recognize and understand natural human language.

Building an MVP

Initially, we weren't sure if the idea was going to work, so we decided to be agile and validate it with a minimum viable product (MVP). If we were going to fail, we would fail fast, minimizing the time and money spent. If the idea proved to be even somewhat fruitful, we would carry on and turn it into a more robust solution.

Our first MVP used only Tweets as a source of potential leads, with Laravel[2] as the keyword to filter relevant Tweets. We already had some expertise in chat-bots, having built one for a laboratory to help it answer questions about medicines, so we quickly created one with the help of IBM's Watson Assistant. Now all that remained was to feed it with information so that it could recognize potential customers... That was no easy task, though, because the underlying Machine Learning model usually needs TONS of data, and there was no readily available source of tweets that represented potential customers to us.

Training

We tried using some examples we had on hand and even wrote our own, but we quickly realized that it wasn't going to be enough. Hence, we came up with the idea of a training infrastructure for our bot, capable of feeding it lots of information (both examples and counterexamples of leads).

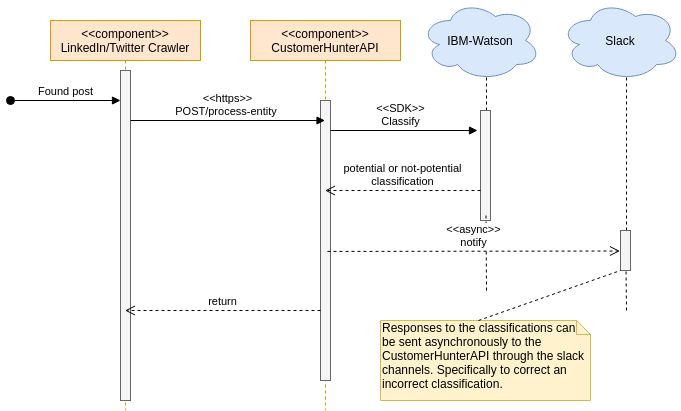

We initially tried to keep training separate from the classification of Tweets into "potential" and "not potential" leads. We later worked out that we could classify and train at the same time: we let our bot classify the Tweets, and whenever it made an incorrect classification, we corrected it so that it could learn from its mistakes (just like humans do).
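To make the "classify and train at the same time" idea concrete, here is a minimal sketch of such a feedback loop. The `ToyLeadClassifier` is only a stand-in we made up for illustration (a simple word counter); the real bot relied on Watson Assistant, whose API is not shown here. The names `classify_and_train` and `human_label` are likewise hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToyLeadClassifier:
    """Illustrative stand-in for the real model: scores a post by the
    words it has seen in previously corrected examples."""
    lead_words: Counter = field(default_factory=Counter)
    other_words: Counter = field(default_factory=Counter)

    def classify(self, text: str) -> bool:
        words = text.lower().split()
        lead_score = sum(self.lead_words[w] for w in words)
        other_score = sum(self.other_words[w] for w in words)
        return lead_score > other_score

    def learn(self, text: str, is_lead: bool) -> None:
        target = self.lead_words if is_lead else self.other_words
        target.update(text.lower().split())


def classify_and_train(model: ToyLeadClassifier, text: str,
                       human_label: Optional[bool] = None) -> bool:
    """Classify a post; if a reviewer disagrees, feed the correction back."""
    predicted = model.classify(text)
    if human_label is not None and human_label != predicted:
        model.learn(text, human_label)  # learn only from mistakes
    return predicted
```

In this sketch the model is only updated when a human reviewer disagrees with it, which mirrors the idea of correcting the bot rather than running a separate training phase.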

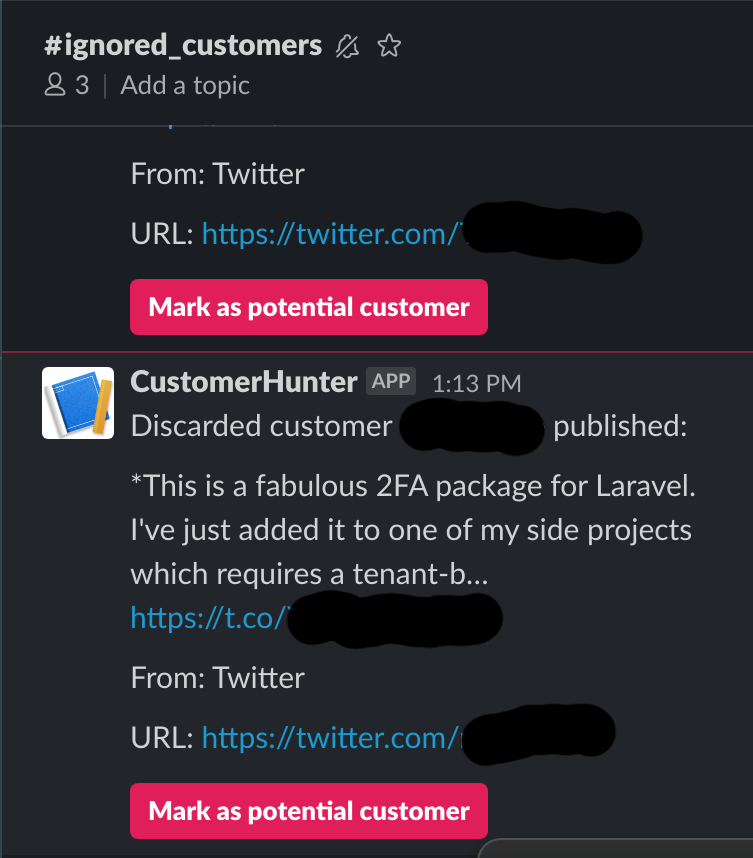

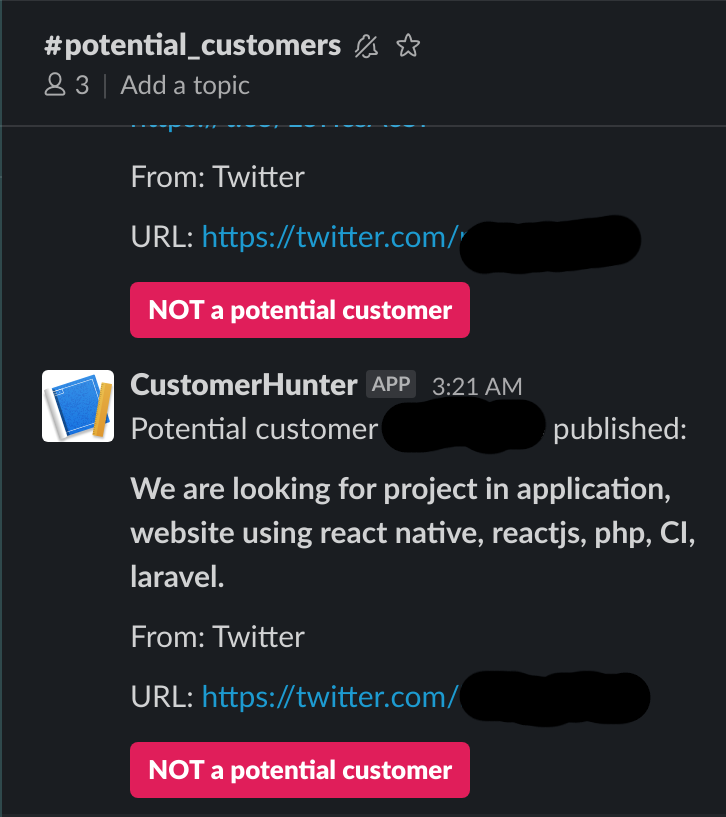

After a bit of research, we found that we could use Slack as an interface for the bot's classification and training. We built a Slack app that spanned two channels, one for potential leads and one for irrelevant leads. Whenever a tweet is processed, it is sent to the Slack app along with its source (Light-it's potential customer) and the content itself, so that we can decide whether the classification is correct and contact the author if it is in fact a lead.

Over time, the MVP showed signs of improvement in its classification capabilities. Slowly but surely, it recognized more potential customers with better accuracy, fewer false positives, and fewer false negatives. That's when we decided to transition to a more robust product.
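The routing between the two channels could be sketched roughly as follows. This is an assumption-laden illustration, not our actual app: the channel names and the `Post` type are hypothetical, and the function only builds a payload with the `channel` and `text` fields that Slack's `chat.postMessage` method accepts. Actually sending it (with a bot token) is left out.

```python
from dataclasses import dataclass

# Hypothetical channel names, chosen for illustration only.
POTENTIAL_CHANNEL = "#potential-leads"
IRRELEVANT_CHANNEL = "#irrelevant-leads"


@dataclass
class Post:
    author: str  # the source of the information (the potential customer)
    text: str    # the information itself
    url: str     # link back to the original post


def build_slack_message(post: Post, is_lead: bool) -> dict:
    """Build a chat.postMessage-style payload routed by classification."""
    channel = POTENTIAL_CHANNEL if is_lead else IRRELEVANT_CHANNEL
    body = f"*{post.author}*\n{post.text}\n{post.url}"
    return {"channel": channel, "text": body}
```

A reviewer who sees a message in the wrong channel can then flag it, and that correction becomes a new training example.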

Extending the MVP

Given the success of our MVP and the fact that it complemented our commercial department, we decided to add LinkedIn to the mix, since it is the ideal social network for lead generation. On LinkedIn, people and companies are constantly offering and searching for jobs. They are all seeking some kind of growth, as we are. We want to connect with potential leads for our mutual benefit, and LinkedIn's mission couldn't be more supportive of this:

Connect the world's professionals to make them more productive and successful.

Integrating LinkedIn meant generalizing some of the existing logic, but since we had built the system knowing this was a possibility, the impact was minimal. The real challenge was building a LinkedIn crawler to search for posts. This alone was almost as big a challenge as the MVP itself, since there was no public API that we could use. We either had to do it by hand or use some kind of program to simulate "doing it by hand" (read: Selenium).

Using Selenium

The challenge of using Selenium to simulate browsing LinkedIn wasn't very big, or so we thought. Actually getting to the information that we wanted and parsing it wasn't hard at all. The problem showed up later, when we deployed the crawler to production. It wouldn't work, and we just couldn't understand why.

We didn't have access to a UI on our server, which made it very hard to debug. We ended up having to print the raw HTML to the standard output and hope everything would load correctly when we viewed it locally on our machines. It turned out that LinkedIn was asking for a verification code they had sent us by email.

Two-Factor Authentication

This was very hard for us to solve, since this kind of challenge is specifically designed to stop people from running bots like ours. However, after a lot of brainstorming, the idea of using two-factor authentication (2FA) came up. With 2FA we would have better control over the codes: instead of accessing our email and parsing the message to get the code, we could generate the codes ourselves. We just needed to make our own version of Google Authenticator. Easy, right?

It may sound hard, but to our surprise, it was actually pretty easy to do. Our research revealed that Google Authenticator uses a well-known algorithm (time-based one-time passwords, or TOTP), meaning that there are public implementations of it that we could download and use. With the algorithm in hand, we created our own two-factor authenticator service, registered our LinkedIn account with it, and everything began to work smoothly...
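For reference, the algorithm behind authenticator apps of this kind is TOTP (RFC 6238), built on top of HOTP (RFC 4226). The sketch below is not the implementation we downloaded; it is a minimal standard-library version under common default assumptions (HMAC-SHA1, 30-second steps, 6 digits), and the function names are our own.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 of the counter, dynamically truncated."""
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # low 4 bits of the last byte pick the window
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret_b32: str, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP where the counter is the current time step."""
    cleaned = secret_b32.upper().replace(" ", "")
    cleaned += "=" * (-len(cleaned) % 8)  # restore any stripped padding
    key = base64.b32decode(cleaned)
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)
```

A crawler would call `totp(secret)` with the Base32 secret shown when enrolling the account in 2FA, and type the result into the verification form. The secret must be stored as carefully as a password.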

Captcha Challenge

That was until the crawler started crashing out of nowhere. It turned out that LinkedIn was presenting us with another kind of challenge: a Captcha.

This turned out to be a pain in the neck. There was no feasible way of solving it, short of an actual robot trained to click and solve the challenge as seen in the gif. The only workaround we have found so far is to have the bot notify us through Slack whenever there's a captcha challenge (which luckily has only happened once) so that we can go and solve it ourselves by hand.
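A rough sketch of that workaround is shown below. It assumes the crawler has the page's HTML as a string and that a captcha page can be spotted by a marker substring. The marker, the function names, and the `notify` callback (which in our case would post to Slack) are all hypothetical choices made for illustration.

```python
from typing import Callable

# Assumption: a challenge page contains one of these substrings.
CAPTCHA_MARKERS = ("captcha",)


def looks_like_captcha(page_html: str) -> bool:
    """Heuristically detect a captcha challenge in the raw HTML."""
    lowered = page_html.lower()
    return any(marker in lowered for marker in CAPTCHA_MARKERS)


def crawl_step(page_html: str, notify: Callable[[str], None]) -> bool:
    """Return True if crawling may continue; otherwise alert a human."""
    if looks_like_captcha(page_html):
        notify("Captcha challenge detected: please solve it by hand.")
        return False
    return True
```

Pausing and alerting, rather than crashing, keeps the crawler's session alive while someone solves the challenge.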

Conclusion

All in all, we are quite happy with the outcome of the project, and we are eager to see the opportunities this bot can uncover for us. I personally had lots of fun working on this project and solving so many interesting technical challenges. It certainly taught me and the team a lot of very valuable lessons.